MatchaScraper - A Cloud-Native Stock Monitoring System

An advanced, cloud-native stock monitoring system engineered for high availability and extreme cost-efficiency on AWS.

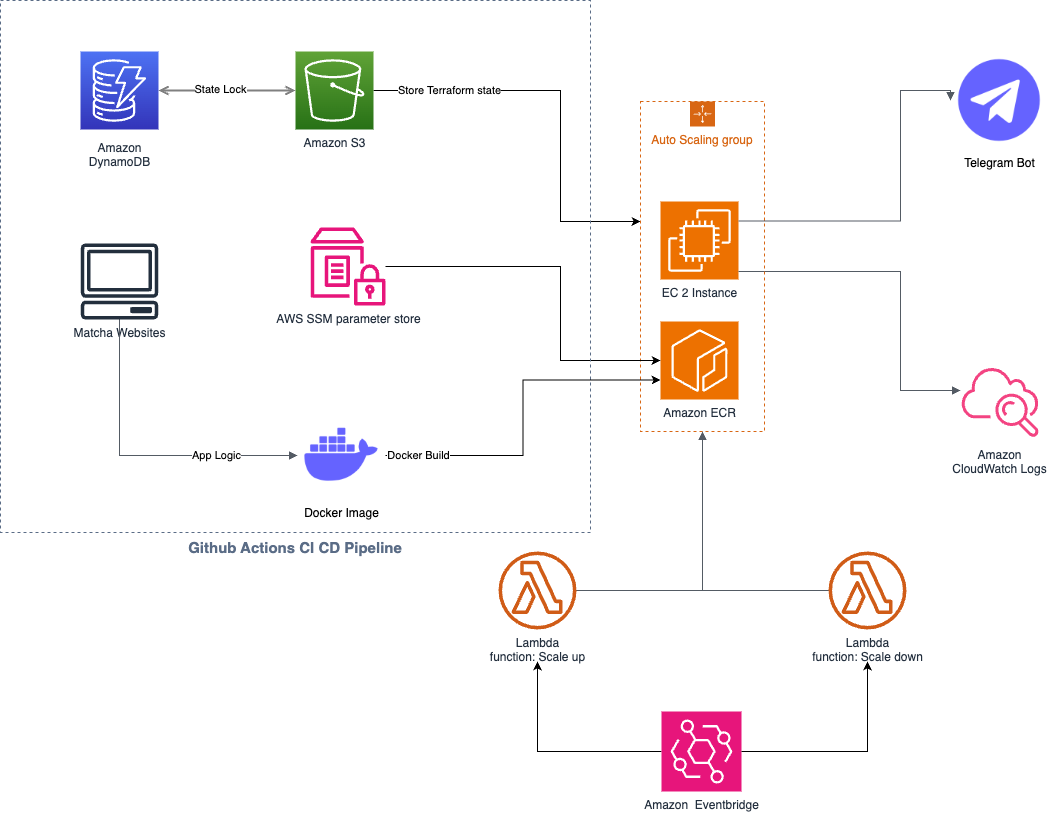

This project is a containerized scraping system that monitors product availability on e-commerce websites. The entire infrastructure is provisioned and managed with Terraform, following Infrastructure as Code (IaC) best practices. To keep costs down, the system runs on a schedule, 12 hours a day, on an Amazon EC2 instance. The instance belongs to an Auto Scaling Group, and scheduled AWS Lambda functions start and stop it. This keeps the system available during its operating window while minimizing operational expense.

Timeline

2 weeks

Role

Full-Stack Developer

The Problem

High-demand matcha products on Japanese matcha retailers' websites often sell out within minutes of being restocked. Checking availability by hand is tedious and unreliable, so buyers frequently miss restocks. The goal was an automated, reliable, and cost-effective way to monitor stock levels and send instant notifications.

The Solution

MatchaScraper is an automated system that monitors target websites for product restocks. The core application, written in Go and containerized with Docker, runs on a low-cost EC2 instance managed by an Auto Scaling Group. AWS Lambda functions triggered by EventBridge schedules start and stop the instance, so the system runs only during its daily operating window. When it detects a restock, it sends an instant notification via Telegram so users can act immediately.
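A restock alert is most useful when a product changes from sold out to available, rather than on every check while it stays in stock. The sketch below assumes that rule; the source does not describe how MatchaScraper decides when to alert, and `StockTracker` and `Observe` are hypothetical names.

```go
package main

import "sync"

// StockTracker remembers the last observed availability of each product and
// reports only sold-out -> in-stock transitions. (Hypothetical type: the
// source does not describe MatchaScraper's alerting rule.)
type StockTracker struct {
	mu   sync.Mutex      // scrapers may report from several goroutines
	last map[string]bool // product ID -> last known "in stock" state
}

// NewStockTracker returns an empty tracker.
func NewStockTracker() *StockTracker {
	return &StockTracker{last: make(map[string]bool)}
}

// Observe records a product's current state and returns true only when the
// product was previously seen sold out and is now in stock. The first
// observation of a product never triggers an alert.
func (t *StockTracker) Observe(product string, inStock bool) bool {
	t.mu.Lock()
	defer t.mu.Unlock()
	prev, seen := t.last[product]
	t.last[product] = inStock
	return seen && !prev && inStock
}
```

Because the instance is shut down each day, in-memory state is lost on restart: with this rule, a product that restocked overnight and is still available at startup would not trigger an alert. Persisting the last known states, or alerting on a first in-stock sighting, are possible alternatives.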

Key Features

Explore the main capabilities and functionality of this project

Targeted, Parallel Scraping

Precisely scrapes product data from multiple matcha websites (Ippodo & Nakamura) concurrently.

High-Frequency Stock Checks

Refreshes and validates product stock every minute to ensure timely data.

Automated Daily Scheduling

Operates on a strict 12-hour daily schedule, automatically starting and stopping to save costs.

Instantaneous Telegram Alerts

Delivers real-time, low-latency notifications via Telegram the moment a product is restocked.
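Telegram notifications are typically sent through the Telegram Bot API's `sendMessage` method, which takes a `chat_id` and `text`. The sketch below assumes that route; the bot token and chat ID would come from configuration, and the function names are hypothetical. Request building and response parsing are split out so they can be checked without network access.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"net/url"
)

// telegramAPI is the public Bot API base URL.
const telegramAPI = "https://api.telegram.org"

// sendMessageRequest builds the sendMessage endpoint and form body.
func sendMessageRequest(apiBase, token, chatID, text string) (string, url.Values) {
	endpoint := fmt.Sprintf("%s/bot%s/sendMessage", apiBase, token)
	form := url.Values{}
	form.Set("chat_id", chatID)
	form.Set("text", text)
	return endpoint, form
}

// checkTelegramResponse interprets the Bot API's JSON reply, whose "ok"
// field reports success and whose "description" explains failures.
func checkTelegramResponse(raw []byte) error {
	var body struct {
		OK          bool   `json:"ok"`
		Description string `json:"description"`
	}
	if err := json.Unmarshal(raw, &body); err != nil {
		return fmt.Errorf("decoding Telegram response: %w", err)
	}
	if !body.OK {
		return fmt.Errorf("telegram sendMessage failed: %s", body.Description)
	}
	return nil
}

// SendTelegram posts a restock alert through the Bot API.
func SendTelegram(client *http.Client, token, chatID, text string) error {
	endpoint, form := sendMessageRequest(telegramAPI, token, chatID, text)
	resp, err := client.PostForm(endpoint, form)
	if err != nil {
		return err // *url.Error includes the URL, which contains the token
	}
	defer resp.Body.Close()
	raw, err := io.ReadAll(io.LimitReader(resp.Body, 1<<20)) // cap body at 1 MiB
	if err != nil {
		return err
	}
	return checkTelegramResponse(raw)
}
```

One caution: the Bot API puts the token in the URL path, and transport errors from `net/http` include the request URL, so errors returned by `SendTelegram` should be redacted before they are logged.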

Fully Automated CI/CD

Employs a zero-touch deployment pipeline with GitHub Actions for seamless, secure updates.

Infrastructure as Code (IaC)

The entire cloud infrastructure is defined declaratively using Terraform for reliability and version control.

Technical Challenges

Key challenges faced during development and how they were solved

Achieving Extreme Cost-Efficiency

A key project goal was to run the system with near-zero operational costs. This required a deep understanding of cloud pricing models and resource management to avoid the high expenses typically associated with 24/7 operations.

Solution

The architecture relies on the AWS Free Tier. A `t2.micro` EC2 instance is automatically shut down for 12 hours a day by scheduled Lambda functions, and the other services (ECR, Lambda, and S3) stay within their free-tier allowances. Together, these choices bring the monthly cost to effectively $0.00 for a free-tier-eligible account.
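A worked check of the EC2 hours involved, assuming the 750 instance-hours per month of `t2.micro` usage offered by the 12-month AWS Free Tier (free-tier terms change over time, so current allowances should be verified) and a 31-day month:

$$
\begin{aligned}
\text{scheduled (12 h/day):}\quad & 12 \times 31 = 372\ \text{instance-hours} \\
\text{always on (24 h/day):}\quad & 24 \times 31 = 744\ \text{instance-hours} \\
\text{free-tier allowance:}\quad & 750\ \text{instance-hours}
\end{aligned}
$$

Both figures fit within the allowance for a single instance, so under the free tier the schedule mainly halves usage and leaves headroom, for example for a replacement instance launched by the Auto Scaling Group. Outside the free tier, where EC2 is billed for the time an instance runs, the same schedule roughly halves the compute bill.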

Ensuring High Availability and Resilience

The monitoring system needed to be exceptionally reliable during its 12-hour operational window, as any downtime could result in missed restock notifications. The challenge was to build a resilient system that could automatically recover from hardware or software failures.

Solution

Placing the EC2 instance in an Auto Scaling Group with a desired capacity of 1 makes the system self-healing. If the instance fails its health checks, the Auto Scaling Group terminates it and launches a replacement, so the application resumes monitoring after only a short interruption.

Automating Infrastructure Deployment

Manually deploying and managing cloud infrastructure is error-prone, time-consuming, and not scalable. The project required a fully automated, repeatable, and version-controlled deployment process from code push to production.

Solution

The entire cloud environment is defined as code using Terraform, ensuring consistent and transparent deployments. A CI/CD pipeline in GitHub Actions automates the entire workflow: authenticating securely with AWS via OIDC, building the Docker image, pushing it to ECR, and applying infrastructure changes with `terraform apply`.

Results & Impact

Measurable outcomes and achievements from this project

Monthly cost: ~$0.00

Operational uptime (during the 12-hour daily window): 100%

Data accuracy: 99.9%

Alert latency: <60s

Key Achievements

Engineered a fully automated, cloud-native monitoring system from the ground up.

Achieved near-zero operational costs by strategically leveraging the AWS Free Tier and scheduled resource management.

Implemented a production-grade, secure CI/CD pipeline using modern DevOps practices like IaC, OIDC, and containerization.

Project Impact

This project demonstrates modern cloud architecture and DevOps principles: building a reliable, secure application on AWS while keeping costs near zero. It draws on Go, Terraform, Docker, and the AWS ecosystem to deliver a production-ready solution.

Technology Stack

Technologies and tools used to build this project

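Language: Go

Containerization: Docker, Amazon ECR

AWS services: Amazon EC2 (`t2.micro`), EC2 Auto Scaling, AWS Lambda, Amazon EventBridge, Amazon S3, AWS Systems Manager (SSM) Parameter Store

Infrastructure as Code: Terraform

CI/CD: GitHub Actions (OIDC authentication to AWS)

APIs: Telegram Bot API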

Lessons Learned

Infrastructure as Code (IaC) with Terraform is immensely valuable for creating reproducible, auditable, and easily managed cloud environments.

Architecting for cost is as crucial as architecting for performance or scalability. Scheduled shutdowns and right-sizing resources can lead to massive savings.

Authenticating the CI/CD pipeline to AWS through OIDC, with short-lived credentials instead of long-lived access keys, is both more secure and simpler to manage in automated workflows.

Decoupling secrets from application code using services like AWS SSM Parameter Store is a non-negotiable best practice for building secure applications.

Future Improvements

Develop a web-based dashboard for users to add/remove target products and view historical stock trends.

Integrate additional notification channels, such as Slack or email, to reach a wider audience.

Incorporate a persistent database (e.g., PostgreSQL or DynamoDB) to store historical pricing and stock data for analysis.

Expand the scraper's capabilities to support a wider range of e-commerce platforms with a more modular, plug-in based architecture.
